You may need to extract all the CSS and JavaScript from a webpage, for example to list every internal and external stylesheet and script that the page uses.

In this tutorial, we will walk you through code that will extract JavaScript and CSS files from web pages in Python.

A webpage is a collection of HTML, CSS, and JavaScript code. When a webpage loads, the browser parses the HTML, applies the CSS, and executes the JavaScript, including any external CSS and JavaScript files that the page references.

A webpage can reference multiple CSS and JavaScript files, and, in general, the more files a page has to fetch, the longer the browser takes to load it completely. Before we can extract JavaScript and CSS files from web pages in Python, we need to install the required libraries.

The following section details how to do so.

Required Libraries

1) Python requests Library

Requests is the de facto standard Python library for making HTTP requests. In this tutorial, we will use it to send a GET request to the webpage URL and retrieve the page's HTML code.

To install requests for your Python environment, run the following pip install command on your terminal or command prompt:

pip install requests

2) Python beautifulsoup4 Library

Beautiful Soup (installed as the `beautifulsoup4` package) is an open-source Python library for pulling data out of HTML and XML files. We will use it in our Python program to parse the webpage's HTML and find its CSS and JavaScript tags.
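To get a feel for how Beautiful Soup pulls data out of HTML, here is a minimal sketch that parses a small, made-up HTML string (no network request involved) and reads a few tags from it. The sample document and variable names are hypothetical and only serve this illustration.

```python
from bs4 import BeautifulSoup

# a small, made-up HTML document used only for this demonstration
html_doc = """
<html>
  <head><title>Demo page</title></head>
  <body>
    <p class="intro">Hello</p>
    <p>World</p>
  </body>
</html>
"""

# parse the HTML text with Python's built-in html.parser
soup = BeautifulSoup(html_doc, "html.parser")

title = soup.title.string                                # text of the <title> tag
paragraphs = [p.get_text() for p in soup.find_all("p")]  # text of every <p> tag
intro = soup.find("p", class_="intro").get_text()        # first <p> with class="intro"

print(title)       # Demo page
print(paragraphs)  # ['Hello', 'World']
print(intro)       # Hello
```

The same `find_all()` and `find()` calls are what the programs below use to locate `<style>`, `<link>`, and `<script>` tags.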

You can install the `beautifulsoup4` library for your Python environment using the following pip install command:

pip install beautifulsoup4

After installing both libraries, open your favorite Python IDE or text editor and code along.

How to Extract CSS Files from Web Pages in Python?

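In an HTML file, CSS is commonly included in two ways: internal CSS, written inside `<style>` tags, and external CSS, linked with `<link rel="stylesheet">` tags. (Inline `style` attributes on individual elements are a third way, which the programs in this tutorial do not extract.) Let's write a Python program that extracts both the internal and the external CSS from an HTML page, starting with importing the modules: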
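As a quick illustration, the sketch below parses a hypothetical sample page (`sample_html` is made up for this example) that contains an external stylesheet link, an internal `<style>` block, and, for comparison, an inline `style` attribute, and collects each kind with Beautiful Soup. It works on a hard-coded string, so it runs without any network access.

```python
from bs4 import BeautifulSoup

# hypothetical sample page with external, internal, and inline CSS
sample_html = """
<html>
<head>
  <link rel="stylesheet" href="/css/main.css">
  <style>body { margin: 0; }</style>
</head>
<body>
  <p style="color: red">Inline-styled paragraph</p>
</body>
</html>
"""

soup = BeautifulSoup(sample_html, "html.parser")

# external CSS: <link rel="stylesheet"> tags point to separate .css files
external = [tag.get("href") for tag in soup.find_all("link", rel="stylesheet")]

# internal CSS: the code sits between <style> and </style>
internal = [str(tag.string) for tag in soup.find_all("style")]

# inline CSS: style="..." attributes; style=True matches any tag that has one
inline = [tag["style"] for tag in soup.find_all(style=True)]

print(external)  # ['/css/main.css']
print(internal)  # ['body { margin: 0; }']
print(inline)    # ['color: red']
```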

```python
import requests
from bs4 import BeautifulSoup
```

Now, we will define a user-defined Python function, `page_Css(page_html)`, that accepts the parsed HTML page `page_html` as an argument and extracts the code of all internal CSS `<style>` tags and the `href` links of all external CSS `<link rel="stylesheet">` tags.

```python
def page_Css(page_html):
    # find all the external CSS <link rel="stylesheet"> tags
    external_css = page_html.find_all("link", rel="stylesheet")

    # find all the internal CSS <style> tags
    internal_css = page_html.find_all("style")

    # print the number of internal and external CSS tags
    # note: `response` is a global variable, assigned before page_Css() is called
    print(f"{response.url} page has {len(external_css)} External CSS tags")
    print(f"{response.url} page has {len(internal_css)} Internal CSS tags")

    # write the internal CSS code in the internal_css.css file
    with open("internal_css.css", "w", encoding="utf-8") as file:
        for index, css_code in enumerate(internal_css):
            file.write(f"\n/* {index+1} Style */\n")  # CSS comments use /* */, not //
            file.write(css_code.string or "")  # .string is None for an empty <style> tag

    # write the external CSS href links in the external_css.txt file
    with open("external_css.txt", "w", encoding="utf-8") as file:
        for index, css_tag in enumerate(external_css):
            file.write(f"{css_tag.get('href')}\n")  # write the external CSS href link
            print(index + 1, "--------->", css_tag.get("href"))
```

- The `find_all('link', rel="stylesheet")` statement returns a list of all the external CSS `<link>` tags.
- The `find_all('style')` method returns a list of all the internal `<style>` tags in `page_html`.
- The `with` block that opens `internal_css.css` writes all the internal CSS code to that file.
- The `with` block that opens `external_css.txt` writes the external CSS `href` links to that file.
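Two details of the Beautiful Soup calls above are easy to miss. First, `rel` is treated as a multi-valued attribute, so `rel="stylesheet"` also matches tags such as `<link rel="alternate stylesheet">`. Second, `css_code.string` is `None` for an empty `<style>` tag, and passing `None` to `file.write()` raises a `TypeError`. A small offline check, using a made-up HTML snippet:

```python
from bs4 import BeautifulSoup

# hypothetical snippet with several <link> variants and an empty <style> tag
snippet = """
<link rel="stylesheet" href="a.css">
<link rel="alternate stylesheet" href="b.css">
<link rel="icon" href="favicon.ico">
<style></style>
"""
soup = BeautifulSoup(snippet, "html.parser")

# rel is multi-valued, so rel="stylesheet" matches any tag whose rel list
# contains "stylesheet" (including "alternate stylesheet")
hrefs = [tag.get("href") for tag in soup.find_all("link", rel="stylesheet")]
print(hrefs)  # ['a.css', 'b.css']

# .string is None when a tag has no children, e.g. an empty <style></style>
empty_style = soup.find("style")
print(empty_style.string)        # None
print(empty_style.string or "")  # prints an empty line; safe to pass to file.write()
```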

After defining the function, let's send a GET request to the webpage URL and call the `page_Css()` function.

```python
# URL of the web page
url = "https://www.techgeekbuzz.com/"

# send a GET request to the URL, giving up after 10 seconds
response = requests.get(url, timeout=10)
response.raise_for_status()  # stop on 4xx/5xx error responses

# parse the response HTML page
page_html = BeautifulSoup(response.text, "html.parser")

# extract the CSS from the HTML page
page_Css(page_html)
```

The `requests.get(url)` function sends an HTTP GET request to `url` and returns a `Response` object. The `BeautifulSoup()` constructor then parses the HTML text of that response using Python's built-in `html.parser`. Now put all the code together and execute it.
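The program passes `response.text`, a string that requests has already decoded using the encoding it infers from the HTTP response, to `BeautifulSoup()`. `BeautifulSoup()` also accepts raw bytes such as `response.content`, in which case it works out the encoding itself, for example from a `<meta charset>` tag. That can help when a server's headers omit or misstate the charset. A minimal offline sketch, assuming a hard-coded byte string stands in for a real response:

```python
from bs4 import BeautifulSoup

# hypothetical raw bytes standing in for response.content; the page declares
# UTF-8 in a <meta> tag and contains a non-ASCII character (é is b"\xc3\xa9")
raw_bytes = b'<html><head><meta charset="utf-8"></head><body><p>caf\xc3\xa9</p></body></html>'

# when given bytes, Beautiful Soup detects the encoding itself
soup = BeautifulSoup(raw_bytes, "html.parser")
print(soup.p.string)  # café
```

In the program, that would mean calling `BeautifulSoup(response.content, "html.parser")` instead; whether it is worth switching depends on the pages you scrape.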

A Python Program to Extract Internal and External CSS from a Webpage

```python
import requests
from bs4 import BeautifulSoup


def page_Css(page_html):
    # find all the external CSS <link rel="stylesheet"> tags
    external_css = page_html.find_all("link", rel="stylesheet")

    # find all the internal CSS <style> tags
    internal_css = page_html.find_all("style")

    # print the number of internal and external CSS tags
    # note: `response` is a global variable, assigned before page_Css() is called
    print(f"{response.url} page has {len(external_css)} External CSS tags")
    print(f"{response.url} page has {len(internal_css)} Internal CSS tags")

    # write the internal CSS code in the internal_css.css file
    with open("internal_css.css", "w", encoding="utf-8") as file:
        for index, css_code in enumerate(internal_css):
            file.write(f"\n/* {index+1} Style */\n")  # CSS comments use /* */, not //
            file.write(css_code.string or "")  # .string is None for an empty <style> tag

    # write the external CSS href links in the external_css.txt file
    with open("external_css.txt", "w", encoding="utf-8") as file:
        for index, css_tag in enumerate(external_css):
            file.write(f"{css_tag.get('href')}\n")
            print(index + 1, "--------->", css_tag.get("href"))


# URL of the web page
url = "https://www.techgeekbuzz.com/"

# send a GET request to the URL, giving up after 10 seconds
response = requests.get(url, timeout=10)
response.raise_for_status()  # stop on 4xx/5xx error responses

# parse the response HTML page
page_html = BeautifulSoup(response.text, "html.parser")

# extract the CSS from the HTML page
page_Css(page_html)
```

Output

https://www.techgeekbuzz.com/ page has 5 External CSS tags

https://www.techgeekbuzz.com/ page has 3 Internal CSS tags

1 ---------> https://secureservercdn.net/160.153.137.163/84g.4be.myftpupload.com/wp-includes/css/dist/block-library/style.min.css?ver=5.6&time=1612532286

2 ---------> https://fonts.googleapis.com/css?family=Ubuntu%3A400%2C700&subset=latin%2Clatin-ext

3 ---------> https://secureservercdn.net/160.153.137.163/84g.4be.myftpupload.com/wp-content/themes/iconic-one-pro/style.css?ver=5.6&time=1612532286

4 ---------> https://secureservercdn.net/160.153.137.163/84g.4be.myftpupload.com/wp-content/themes/iconic-one-pro/custom.css?ver=5.6&time=1612532286

5 ---------> https://secureservercdn.net/160.153.137.163/84g.4be.myftpupload.com/wp-content/themes/iconic-one-pro/fonts/font-awesome.min.css?ver=5.6&time=1612532286

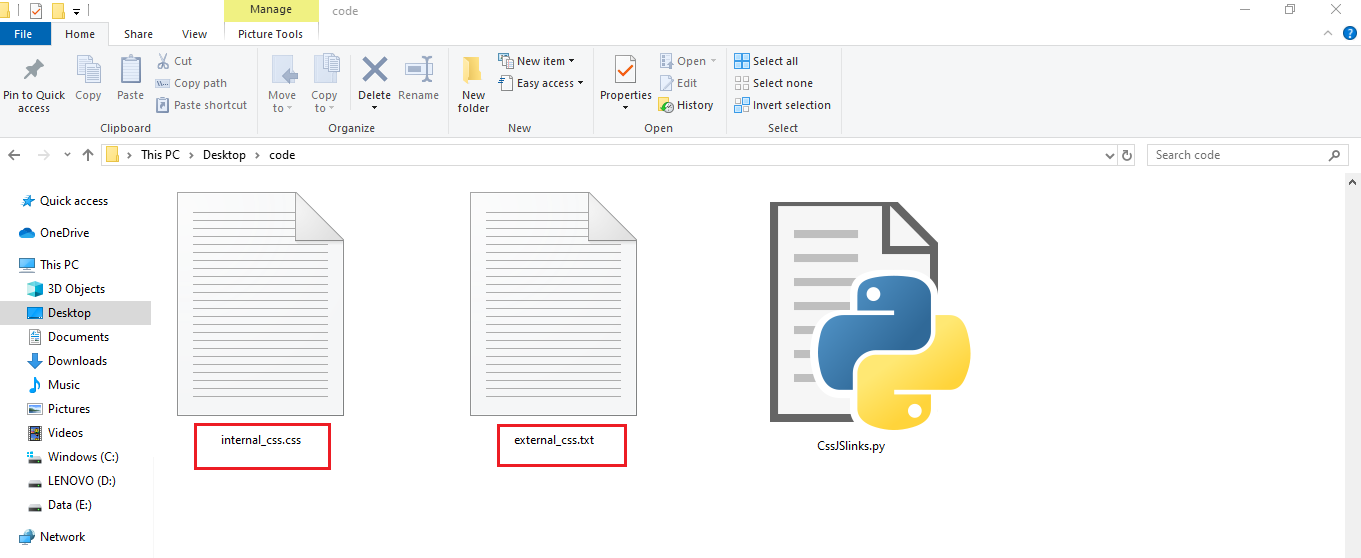

The program prints only the external CSS links. After running it, check the current working directory (usually the folder you ran the script from). There, you will find two new files, `internal_css.css` and `external_css.txt`, which contain the internal CSS code and the external CSS links, respectively.
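The `page_Css()` function above reads the global `response` variable and prints its results, which makes it hard to reuse or test. One possible variant, sketched below, takes the parsed page and returns the data instead. `collect_css()` and the sample HTML are hypothetical names for illustration, not part of the original program.

```python
from bs4 import BeautifulSoup


def collect_css(page_html):
    """Return (internal CSS code list, external CSS href list) for a parsed page.

    Hypothetical variant of page_Css(): it returns the data instead of printing
    it, so it does not depend on a global `response` variable.
    """
    internal = [tag.string or "" for tag in page_html.find_all("style")]
    external = [tag.get("href") for tag in page_html.find_all("link", rel="stylesheet")]
    return internal, external


# made-up page used to exercise the function without a network request
sample = BeautifulSoup(
    '<link rel="stylesheet" href="/main.css"><style>p { color: red; }</style>',
    "html.parser",
)
internal, external = collect_css(sample)
print(len(internal), "internal,", len(external), "external")  # 1 internal, 1 external
print(external)  # ['/main.css']
```

With a live page, you would call `collect_css(BeautifulSoup(response.text, "html.parser"))` and then decide separately whether to print the results or write them to files.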

Next, let's write a similar Python program that will extract JavaScript from the webpage.

How to Extract JavaScript Files from Web Pages in Python?

Again, we will start by importing the required modules.

```python
import requests
from bs4 import BeautifulSoup
```

Now, let's add a user-defined function, `page_javaScript(page_html)`, that extracts the internal and external JavaScript from the parsed HTML page.

```python
def page_javaScript(page_html):
    # list all the <script> tags
    all_script_tags = page_html.find_all("script")

    # split them into external (has a src attribute) and internal (no src) JavaScript
    external_js = list(filter(lambda script: script.has_attr("src"), all_script_tags))
    internal_js = list(filter(lambda script: not script.has_attr("src"), all_script_tags))

    # print the number of internal and external JavaScript tags
    # note: `response` is a global variable, assigned before page_javaScript() is called
    print(f"{response.url} page has {len(external_js)} External JS Files")
    print(f"{response.url} page has {len(internal_js)} Internal JS Code")

    # write the internal JavaScript code in the internal_script.js file
    with open("internal_script.js", "w", encoding="utf-8") as file:
        for index, js_code in enumerate(internal_js):
            file.write(f"\n// {index+1} script\n")
            file.write(js_code.string or "")  # .string is None for an empty <script> tag

    # write the external JavaScript source links in the external_script.txt file
    with open("external_script.txt", "w", encoding="utf-8") as file:
        for index, script_tag in enumerate(external_js):
            file.write(f"{script_tag.get('src')}\n")
            print(index + 1, "--------->", script_tag.get("src"))
```

- The `page_html.find_all("script")` statement returns a list of all the JavaScript `<script>` tags present in `page_html`.
- `list(filter(lambda script: script.has_attr("src"), all_script_tags))` and `list(filter(lambda script: not script.has_attr("src"), all_script_tags))` split that list, using Python's lambda and filter functions, into external JavaScript (tags with a `src` attribute) and internal JavaScript (tags without one).
- The `with` block that opens `internal_script.js` writes the internal JavaScript code to that new file.
- The `with` block that opens `external_script.txt` writes all the external JavaScript source links to that file.
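The `filter()`/`lambda` calls can also be written as list comprehensions, and Beautiful Soup can select the external scripts directly with `find_all("script", src=True)`. Note also that not every internal `<script>` tag contains JavaScript; JSON-LD data, for example, uses `type="application/ld+json"`. The sketch below, run on a made-up snippet, shows both points. The `JS_TYPES` set is an assumption about which `type` values to treat as JavaScript, not an exhaustive list.

```python
from bs4 import BeautifulSoup

# hypothetical page with one external script, one inline script, and one JSON-LD block
sample_html = """
<script src="https://example.com/app.js"></script>
<script>console.log("inline");</script>
<script type="application/ld+json">{"@type": "WebSite"}</script>
"""
soup = BeautifulSoup(sample_html, "html.parser")
all_script_tags = soup.find_all("script")

# list-comprehension equivalents of the filter()/lambda calls
external_js = [tag for tag in all_script_tags if tag.has_attr("src")]
internal_js = [tag for tag in all_script_tags if not tag.has_attr("src")]

# src=True matches <script> tags that have a src attribute, like external_js above
print(external_js == soup.find_all("script", src=True))  # True

# assumption: a tag holds JavaScript when it has no type attribute or one of these values
JS_TYPES = {"text/javascript", "application/javascript", "module"}
internal_code = [
    tag for tag in internal_js
    if tag.get("type") is None or tag.get("type").lower() in JS_TYPES
]

print(len(external_js), len(internal_js), len(internal_code))  # 1 2 1
```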

Now, we need to send a GET request to the page URL, parse the response, and call `page_javaScript()`.

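```python
# URL of the web page
url = "https://www.techgeekbuzz.com/"

# send a GET request to the URL, giving up after 10 seconds
response = requests.get(url, timeout=10)
response.raise_for_status()  # stop on 4xx/5xx error responses

# parse the response HTML page
page_html = BeautifulSoup(response.text, "html.parser")

# extract the JavaScript from the HTML page
page_javaScript(page_html)
```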

Finally, put all the code together and execute.

A Python Program to Extract Internal and External JavaScript from a Webpage

```python
import requests
from bs4 import BeautifulSoup


def page_javaScript(page_html):
    # list all the <script> tags
    all_script_tags = page_html.find_all("script")

    # split them into external (has a src attribute) and internal (no src) JavaScript
    external_js = list(filter(lambda script: script.has_attr("src"), all_script_tags))
    internal_js = list(filter(lambda script: not script.has_attr("src"), all_script_tags))

    # print the number of internal and external JavaScript tags
    # note: `response` is a global variable, assigned before page_javaScript() is called
    print(f"{response.url} page has {len(external_js)} External JS Files")
    print(f"{response.url} page has {len(internal_js)} Internal JS Code")

    # write the internal JavaScript code in the internal_script.js file
    with open("internal_script.js", "w", encoding="utf-8") as file:
        for index, js_code in enumerate(internal_js):
            file.write(f"\n// {index+1} script\n")
            file.write(js_code.string or "")  # .string is None for an empty <script> tag

    # write the external JavaScript source links in the external_script.txt file
    with open("external_script.txt", "w", encoding="utf-8") as file:
        for index, script_tag in enumerate(external_js):
            file.write(f"{script_tag.get('src')}\n")
            print(index + 1, "--------->", script_tag.get("src"))


# URL of the web page
url = "https://www.techgeekbuzz.com/"

# send a GET request to the URL, giving up after 10 seconds
response = requests.get(url, timeout=10)
response.raise_for_status()  # stop on 4xx/5xx error responses

# parse the response HTML page
page_html = BeautifulSoup(response.text, "html.parser")

# extract the JavaScript from the HTML page
page_javaScript(page_html)
```

Output

https://www.techgeekbuzz.com/ page has 8 External JS Files

https://www.techgeekbuzz.com/ page has 6 Internal JS Code

1 ---------> //pagead2.googlesyndication.com/pagead/js/adsbygoogle.js

2 ---------> https://secureservercdn.net/160.153.137.163/84g.4be.myftpupload.com/wp-includes/js/jquery/jquery.min.js?ver=3.5.1&time=1612532286

3 ---------> https://secureservercdn.net/160.153.137.163/84g.4be.myftpupload.com/wp-includes/js/jquery/jquery-migrate.min.js?ver=3.3.2&time=1612532286

4 ---------> https://secureservercdn.net/160.153.137.163/84g.4be.myftpupload.com/wp-content/themes/iconic-one-pro/js/respond.min.js?ver=5.6&time=1612532286

5 ---------> https://www.googletagmanager.com/gtag/js?id=UA-132423771-1

6 ---------> https://secureservercdn.net/160.153.137.163/84g.4be.myftpupload.com/wp-content/themes/iconic-one-pro/js/selectnav.js?ver=5.6&time=1612532286

7 ---------> https://secureservercdn.net/160.153.137.163/84g.4be.myftpupload.com/wp-includes/js/wp-embed.min.js?ver=5.6&time=1612532286

8 ---------> https://img1.wsimg.com/tcc/tcc_l.combined.1.0.6.min.js

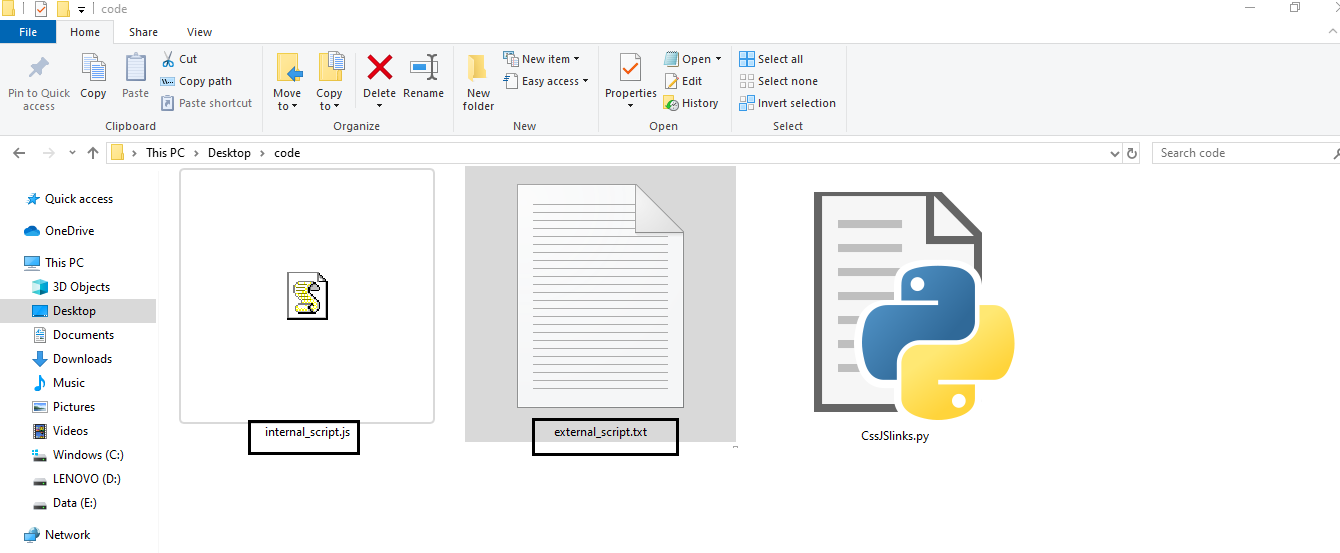

The program prints only the webpage's external JavaScript source links. After running it, check the current working directory (usually the folder you ran the script from) for the newly created `internal_script.js` and `external_script.txt` files, which contain the webpage's internal JavaScript code and external JavaScript links, respectively.
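Some of the extracted links are not absolute URLs. The first external JavaScript link in the output above, `//pagead2.googlesyndication.com/pagead/js/adsbygoogle.js`, is protocol-relative, and pages may also use root-relative paths. If you want to download these files, you could resolve each link against the page URL with the standard library's `urllib.parse.urljoin()`, for example `urljoin(response.url, script_tag.get("src"))`. A minimal sketch, where the second link is a hypothetical path added for illustration:

```python
from urllib.parse import urljoin

# the page URL requested in this tutorial
page_url = "https://www.techgeekbuzz.com/"

links = [
    "//pagead2.googlesyndication.com/pagead/js/adsbygoogle.js",   # protocol-relative
    "/js/example.js",                                             # hypothetical root-relative path
    "https://www.googletagmanager.com/gtag/js?id=UA-132423771-1", # already absolute
]

# urljoin() resolves each link against the page URL; absolute links pass through unchanged
absolute_links = [urljoin(page_url, link) for link in links]
for link in absolute_links:
    print(link)
# https://pagead2.googlesyndication.com/pagead/js/adsbygoogle.js
# https://www.techgeekbuzz.com/js/example.js
# https://www.googletagmanager.com/gtag/js?id=UA-132423771-1
```

The protocol-relative link inherits `https:` from the page URL, and the root-relative path inherits the page's host.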

Conclusion

In this tutorial, you learned how to extract JavaScript and CSS files from web pages in Python. To do so, we used web scraping with the Python `requests` and `beautifulsoup4` libraries.

Before running the above Python programs, make sure that both libraries are installed in your Python environment.
